Understanding ComfyUI's Core: LDM Logic Made Simple

Hi everyone! ComfyUI works like a set of building blocks (nodes), which is what makes it so flexible. Today we'll uncover the engine underneath it: LDM. Don't be intimidated! Once you grasp it, the node connections will click: "Aha! That's why!"

Why "Stable Diffusion"?

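Simple: the name reflects Stability AI, which released the model together with researchers from CompVis (LMU Munich) and Runway, much as Photoshop carries the Adobe name.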

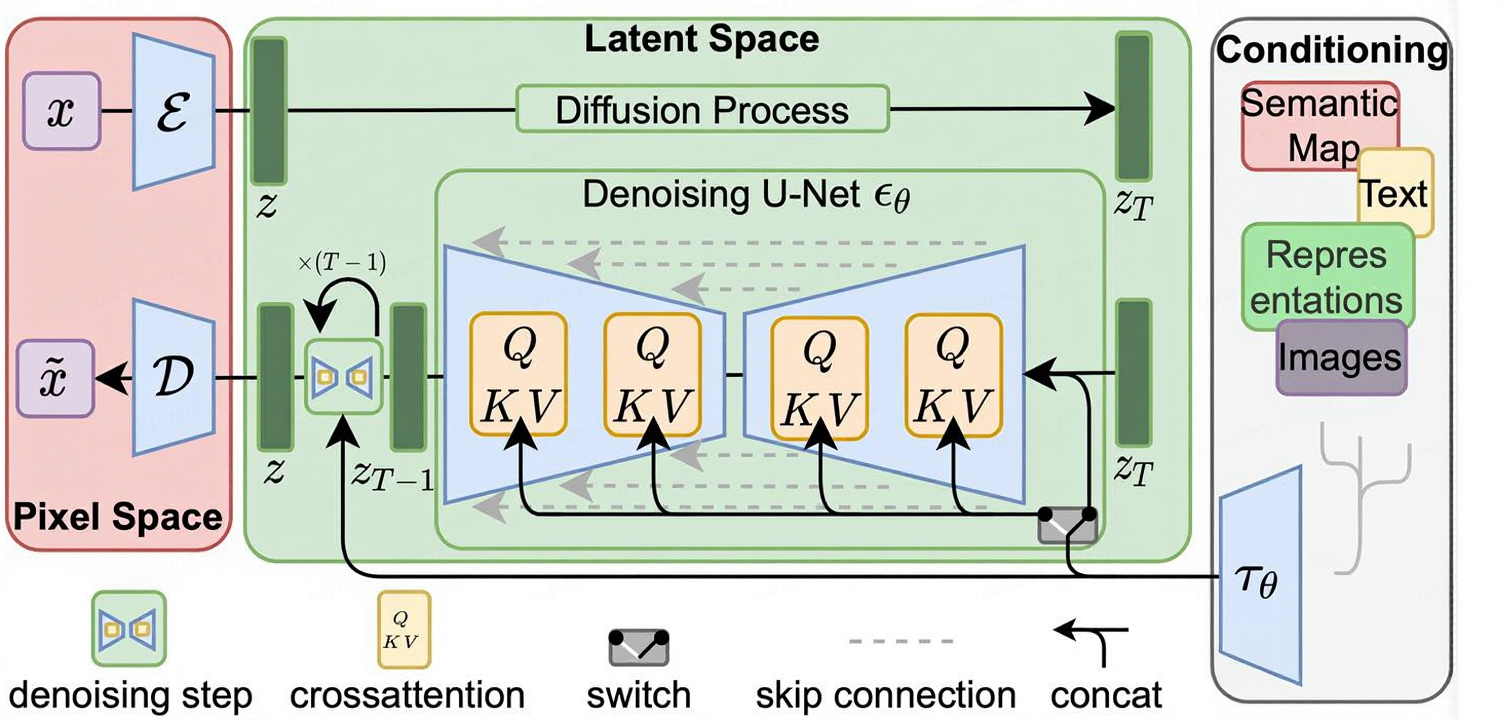

What is LDM? How Does It Work?

LDM (Latent Diffusion Model) is Stable Diffusion's core engine. Its key concept: Latent Space.

Imagine a Backstage Workshop:

Generating high-resolution images directly in pixel space means processing hundreds of thousands to millions of values per image, which is slow and memory-heavy.

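LDM's smart solution is to do most of the work in latent space. This is a compressed "sketchpad" where each image is represented by a much smaller grid of numbers (latent variables). In Stable Diffusion 1.x, for example, a 512×512 RGB image becomes a 64×64 latent with 4 channels.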
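To put rough numbers on that compression, here is a small Python sketch. It assumes the Stable Diffusion 1.x / SDXL convention of an 8x downscale per side and 4 latent channels. Other model families may use different values, and the function names are only for illustration.

```python
def pixel_value_count(width: int, height: int, channels: int = 3) -> int:
    """Number of values in an RGB image of the given size."""
    return width * height * channels


def latent_value_count(width: int, height: int,
                       downscale: int = 8, latent_channels: int = 4) -> int:
    """Number of values in an SD-style latent (assumed: 8x smaller per side, 4 channels)."""
    return (width // downscale) * (height // downscale) * latent_channels


if __name__ == "__main__":
    for w, h in [(512, 512), (1024, 1024)]:
        px = pixel_value_count(w, h)
        lat = latent_value_count(w, h)
        print(f"{w}x{h}: {px:,} pixel values vs {lat:,} latent values ({px / lat:.0f}x fewer)")
```

Under these assumptions, the sampler has 48 times fewer numbers to work on at either resolution. That is the main source of the speed and VRAM savings.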

Simplified Workflow (Text-to-Image Example):

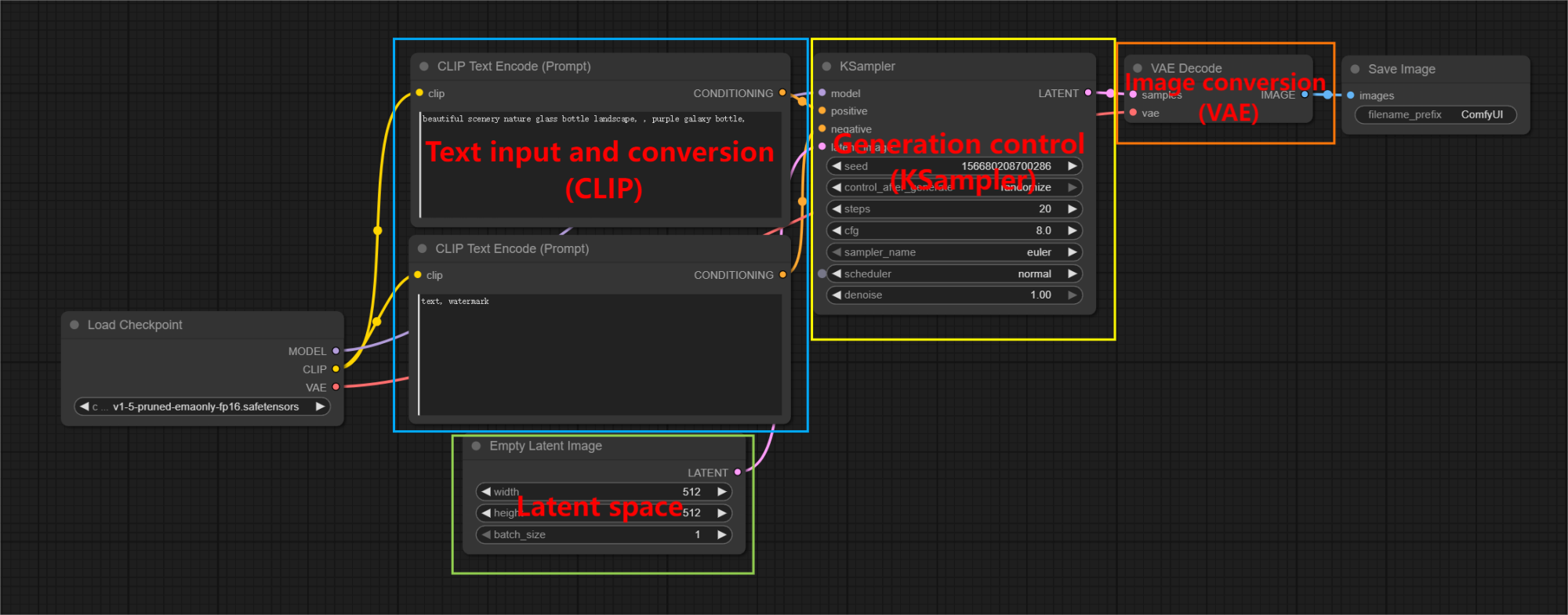

Input & Conversion (Prep):

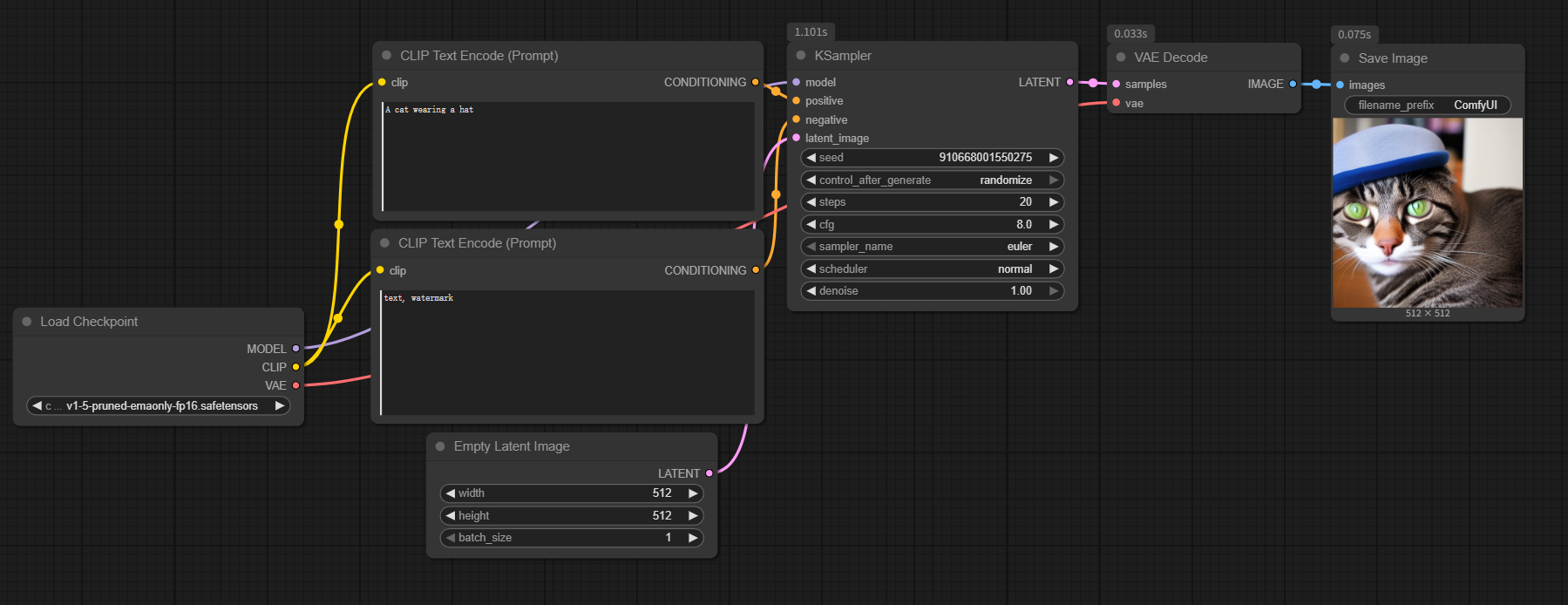

- Text prompts (e.g., "a cat wearing a hat") are converted by the CLIP text encoder into numerical embeddings, the machine "code" the model understands.

- For image inputs (e.g., image-to-image), the VAE encoder compresses the picture into a latent.
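The sketch below shows what the prep stage hands to the sampler, as shapes. A toy whitespace "tokenizer" (hypothetical; real CLIP uses byte-pair encoding) shows how a prompt is fitted into CLIP's fixed 77 token slots. The conditioning shape assumes SD 1.x's 768-wide CLIP text encoder. For text-to-image there is no input picture, so the sampler starts from an empty latent. In ComfyUI's EmptyLatentImage node, for SD 1.x/SDXL-style models, this is an all-zero array of shape [batch, 4, height/8, width/8]. An encoded input image has the same layout.

```python
import numpy as np

CLIP_CONTEXT_LENGTH = 77  # token slots per prompt chunk in CLIP text encoders
SD1_TEXT_EMBED_DIM = 768  # width of each token embedding in SD 1.x's CLIP text encoder


def toy_token_slots(prompt: str, max_len: int = CLIP_CONTEXT_LENGTH) -> list[str]:
    """Hypothetical whitespace 'tokenizer' mimicking CLIP's fixed-length layout.

    Real CLIP uses byte-pair encoding, so word counts and token counts differ.
    """
    words = prompt.lower().split()[: max_len - 2]  # leave room for start/end markers
    tokens = ["<start>"] + words + ["<end>"]
    return tokens + ["<pad>"] * (max_len - len(tokens))


def conditioning_shape(batch_size: int = 1) -> tuple[int, int, int]:
    """Shape of SD 1.x text conditioning: one 768-wide vector per token slot."""
    return (batch_size, CLIP_CONTEXT_LENGTH, SD1_TEXT_EMBED_DIM)


def empty_latent(width: int, height: int, batch_size: int = 1) -> np.ndarray:
    """All-zero latent laid out like ComfyUI's EmptyLatentImage output for SD models."""
    return np.zeros((batch_size, 4, height // 8, width // 8), dtype=np.float32)


slots = toy_token_slots("a cat wearing a hat")
print(slots[:7], len(slots))
print(conditioning_shape())
print(empty_latent(512, 512).shape)
```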

Core Creation (In Latent Space):

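- The sampler works on a latent: pure noise for text-to-image, or a noised version of the encoded image when you start from a picture.

- Samplers (e.g., KSampler) remove the noise step by step, guided by your prompt embeddings, to produce a "compressed draft." Settings such as the step count control how gradually the noise is removed.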

Key benefit: because this loop runs on the small latent instead of full-size pixels, it uses less VRAM and runs faster.
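Two ideas from the sampling stage can be sketched numerically: step count and prompt guidance. The first function builds the "karras" noise schedule, one of the scheduler options in KSampler. It shows how the step count divides the path from heavy noise down to zero. The sigma range used here is roughly that of SD 1.x models and is an assumption. The second function is the classifier-free guidance formula that KSampler's "cfg" setting scales. No neural network is involved, and the arrays are placeholders.

```python
import numpy as np


def karras_sigmas(steps: int, sigma_min: float = 0.0292,
                  sigma_max: float = 14.6146, rho: float = 7.0) -> np.ndarray:
    """Noise level per sampling step (Karras et al. schedule), ending at 0.

    sigma_min/sigma_max are approximate SD 1.x values (assumption).
    """
    ramp = np.linspace(0.0, 1.0, steps)
    min_inv_rho = sigma_min ** (1.0 / rho)
    max_inv_rho = sigma_max ** (1.0 / rho)
    sigmas = (max_inv_rho + ramp * (min_inv_rho - max_inv_rho)) ** rho
    return np.append(sigmas, 0.0)  # final entry: fully denoised


def cfg_combine(uncond: np.ndarray, cond: np.ndarray, cfg_scale: float) -> np.ndarray:
    """Classifier-free guidance: move from the unconditional prediction toward
    (and, when cfg_scale > 1, past) the prompt-conditioned prediction."""
    return uncond + cfg_scale * (cond - uncond)


for steps in (5, 20):
    print(steps, "steps, first noise levels:", np.round(karras_sigmas(steps)[:4], 3))

uncond = np.array([0.0, 0.0])  # placeholder "no prompt" prediction
cond = np.array([1.0, -0.5])   # placeholder "with prompt" prediction
print(cfg_combine(uncond, cond, 7.0))
```

With more steps, each step removes a smaller slice of noise. A higher cfg pushes the result further in the direction the prompt suggests.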

Output & Conversion (Final Image):

- The VAE decoder converts the latent "draft" back into a full-resolution pixel image, which can then be saved as a regular file (e.g., PNG).
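Here is a rough sketch of what the decode stage does to shapes and values, assuming an SD-style VAE that upscales 8x per side. ComfyUI's IMAGE data is a float array laid out as batch, height, width, channels, with values from 0 to 1. Turning that into the 0 to 255 integers a PNG stores is a clip-and-convert step. The rounding choice below is this sketch's own, and the random array stands in for real decoder output.

```python
import numpy as np


def decoded_image_shape(latent_shape: tuple[int, int, int, int]) -> tuple[int, int, int, int]:
    """[batch, 4, h, w] latent -> [batch, H, W, 3] image, assuming an 8x VAE."""
    batch, _channels, h, w = latent_shape
    return (batch, h * 8, w * 8, 3)


def to_uint8(image: np.ndarray) -> np.ndarray:
    """Map float pixels in [0, 1] to 0-255 integers, clipping out-of-range values."""
    return np.clip(image * 255.0, 0, 255).round().astype(np.uint8)


# Stand-in for decoder output: random floats in [0, 1) with the decoded shape.
fake_image = np.random.default_rng(0).random(decoded_image_shape((1, 4, 64, 64)))
png_ready = to_uint8(fake_image)
print(png_ready.shape, png_ready.dtype)
```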

Why This Helps in ComfyUI:

Node Connections Make Sense:

CLIP Text Encode (step 1) → KSampler (step 2) → VAE Decode (step 3). Each link in the graph passes the output of one LDM stage on to the next, so the logic chain becomes clear.
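To see that chain in an actual graph, here is a simplified text-to-image workflow in ComfyUI's API (JSON) format, written as a Python dict. The checkpoint file name, prompts, and sampler settings are placeholders. The two helper functions are hypothetical and not part of ComfyUI. They only follow the links, each written as [source_node_id, output_index], to recover the order in which the stages must run. ComfyUI's own executor has its own scheduling logic.

```python
from graphlib import TopologicalSorter

workflow = {
    "4": {"class_type": "CheckpointLoaderSimple",  # outputs: 0=MODEL, 1=CLIP, 2=VAE
          "inputs": {"ckpt_name": "your_model.safetensors"}},  # placeholder file name
    "5": {"class_type": "EmptyLatentImage",
          "inputs": {"width": 512, "height": 512, "batch_size": 1}},
    "6": {"class_type": "CLIPTextEncode",  # positive prompt
          "inputs": {"text": "a cat wearing a hat", "clip": ["4", 1]}},
    "7": {"class_type": "CLIPTextEncode",  # negative prompt
          "inputs": {"text": "blurry", "clip": ["4", 1]}},
    "3": {"class_type": "KSampler",
          "inputs": {"seed": 42, "steps": 20, "cfg": 7.0,
                     "sampler_name": "euler", "scheduler": "normal", "denoise": 1.0,
                     "model": ["4", 0], "positive": ["6", 0],
                     "negative": ["7", 0], "latent_image": ["5", 0]}},
    "8": {"class_type": "VAEDecode",
          "inputs": {"samples": ["3", 0], "vae": ["4", 2]}},
    "9": {"class_type": "SaveImage",
          "inputs": {"filename_prefix": "ComfyUI", "images": ["8", 0]}},
}


def upstream_nodes(node: dict) -> set[str]:
    """IDs of the nodes feeding this one (link values are [source_id, output_index])."""
    return {value[0] for value in node["inputs"].values() if isinstance(value, list)}


def execution_order(graph: dict) -> list[str]:
    """Class types in an order where every node runs after its inputs."""
    deps = {node_id: upstream_nodes(node) for node_id, node in graph.items()}
    return [graph[n]["class_type"] for n in TopologicalSorter(deps).static_order()]


print(" -> ".join(execution_order(workflow)))
```

The printed order may interleave the independent nodes differently. Still, CLIP Text Encode always comes before KSampler, which always comes before VAE Decode. The loader and EmptyLatentImage nodes are the supporting pieces around those three LDM stages.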

Latent Space Awareness Explains:

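- Modest VRAM requirements (≥3GB): the compressed latent data lowers memory demands.

- Parameter adjustments: settings such as steps, CFG scale, and denoise act on the latent during sampling, which is why they shape the details of the final image.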

Practical Value:

Understanding fundamentals lets you:

- Tune parameters purposefully, not blindly.

- Adapt faster to new models (e.g., SDXL is still built on the latent diffusion approach).

ComfyUI & LDM: Perfect Match

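Each node mirrors an LDM stage: CLIP for text, VAE for images, KSampler for generation. Because the nodes map onto these stages, you can swap, rewire, or inspect each stage independently, and that is where ComfyUI's flexibility comes from.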

Key Takeaways:

- LDM = Stable Diffusion's engine (works in compressed latent space).

- CLIP: Converts text to machine "code."

- VAE: Encodes/decodes images ↔ latent space.

- KSampler: Crafts images in latent space.

Grasp this, and ComfyUI's node logic unfolds! Think of latent space as your "compressed workshop." It feels abstract at first but becomes intuitive with practice.

Unlock Full-Powered AI Creation!

Experience ComfyUI online instantly:

https://market.cephalon.ai/share/register-landing?invite_id=RS3EwW

Join our global creator community:

https://discord.gg/MSEkCDfNSW

Collaborate with creators worldwide & get real-time admin support.